Let's Skip to the End with AI Regulation

Introduction

Artificial Intelligence (AI) regulation is coming. As usual, there seem to be three camps: those who are cheering, those who are jeering, and the other 80% of the population that isn't clear on what they're arguing about. Regardless of where you stand on the topic, we can all agree that regulation has pros and cons. So rather than spend time arguing one side or the other, let's try to thread the needle this time around and implement safety and security without halting innovation and adoption. If we don't, we need only look a few years into the past to see how this plays out.

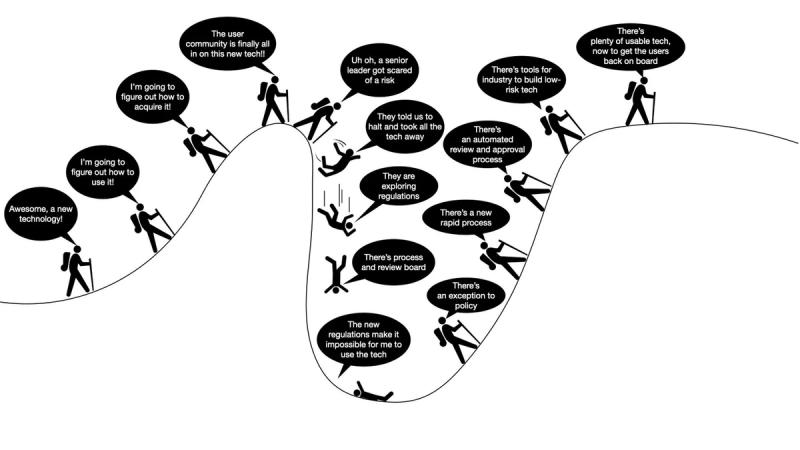

The Familiar Path of New Technology Adoption

In the context of the Federal government (our main focus here), a new technology usually follows a predictable pattern: Discovery, Adoption, Regulation, Regulatory Winter (a period when the tech is frozen while the policy wonks figure out the regulations), Adaptation around Regulation, and finally, Re-adoption. How do we know? Look at how the Department of Defense went about adopting off-the-shelf drones. In the early days, right after DJI and others of their ilk showed consumers that cheap, fun, useful drones were within reach, drones shot to the top of everyone's Christmas list and every operator's IPL, STIPL, and UFR list. Shortly thereafter, all the cool kids (Special Operations in particular) had pocket-sized quadcopters buzzing low and fast from backyards to Bagram.

Then what happened? The policy and security folks started, justifiably, ringing alarms about drones "phoning home" to China. The Department reacted, threw down policies to cover the risk, and disallowed any drone that didn't meet the new requirements, which was all of them. (Cue screeching-halt sounds.) Then, slowly and progressively, the super cool kids got exceptions to policy from deity-level approval authorities. Next, organizations like DIU were charged with sourcing compliant drones and setting standards. Now, several years later, an operational end user can purchase a moderately priced off-the-shelf drone: not DJI cheap, but less than the $250k+ that most small military drones run. This pattern isn't isolated; it happened with smartphones before drones, and it will happen again.

The Inevitability of AI Regulation

While we may disagree on the appropriate timing and extent of AI regulation, the familiar pattern is already taking shape. AI, once a capability developing quietly in the background, catapulted into public awareness with the release of ChatGPT. The language model quickly became a household name, capturing widespread attention and sparking conversations about its implications. Its popularity served as a wake-up call to institutions across the United States, prompting swift reactions from the White House, Capitol Hill, and even the Pentagon. Government agencies at all levels are now scrambling to catch up with what some consider an AI revolution.

This sudden surge of interest from policymakers underscores both the transformative power of AI technologies like ChatGPT and their potential risks if left unchecked. As society grapples with the novel challenges posed by increasingly sophisticated AI systems, debates about when and how to regulate them have gained significant traction. Opinions diverge widely among experts, scholars, lawmakers, industry leaders, and advocacy groups: essentially anyone invested in shaping our technological future. Some argue for immediate regulatory measures to ensure ethical use and mitigate harms to privacy or human rights, emphasizing that proactive oversight is essential given AI's rapid evolution and ever-expanding capabilities. Policymakers know they have to do something, but WHAT is still unclear. The history of small drones and smartphones offers insight into how this might play out: all else being equal, AI is headed for a gully of halted progress.

Beware of Experts with Agendas

Adding to the complication and confusion, we have non-AI industry leaders calling for a halt to AI development, and AI industry leaders from the likes of OpenAI, Google, Anthropic, Scale AI, and Microsoft urging regulation with dire warnings that border on dystopian. Logically, these are the people we should listen to: those closest to the science, who know first-hand the potential downsides of the technology they are developing. Surely if these techno-barons are begging the government to step in, things must be serious. Perhaps.

What is more likely is that the accelerating pace of open-source AI development is eroding their near-monopolistic market position, and a few well-crafted regulations would shut out the academics, upstarts, and garage hackers who threaten it. The concept of "regulatory capture" is well known and documented in industries like transportation and energy. Added regulation may raise costs for the current market leaders and hurt profits a little, but shutting out new entrants more than makes up for it. Imagine if Kodak had invested a little more time on The Hill pushing regulations on camera technology; it might still be a market leader.

But if we have to look on these industry experts with suspicion, who can we trust?

Let's War Game AI Regulation

War gaming refers to a strategic planning method used primarily in military and business contexts. It involves creating a hypothetical scenario, often a conflict or challenge, and exploring how different actors might respond. The aim is to anticipate potential outcomes, identify weaknesses or blind spots in strategies, and develop more robust plans. Applied to AI, war gaming can help stakeholders anticipate problems arising from new regulations, technological developments, or shifts in the competitive landscape, and design solutions proactively.

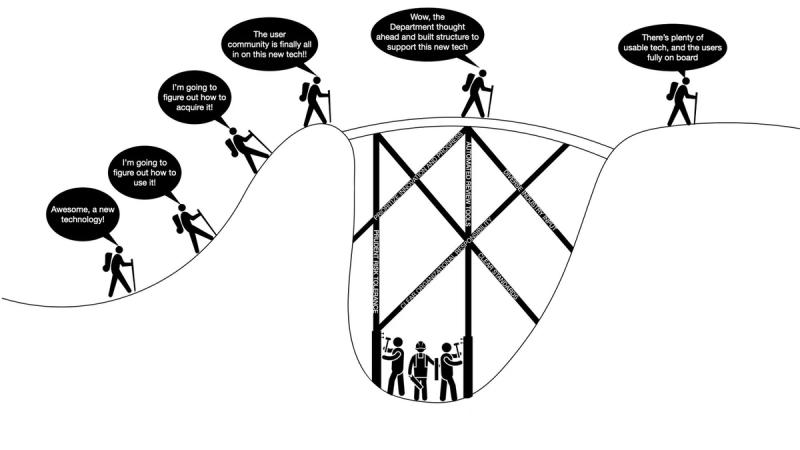

Applied specifically to future AI regulation, war gaming means simulating various regulatory scenarios to anticipate their impacts and the responses they provoke. By playing out these scenarios, policymakers and AI developers can identify regulatory bottlenecks, conflicts, or operational challenges before they arise. That foresight allows tools and structures to be built with the likely regulations already in mind, smoothing transitions and reducing the likelihood of disruptive surprises. The result can be more resilient, adaptable AI systems and a more comprehensive, forward-looking regulatory framework.

Let's learn from the drone example and build the processes, structures, systems, responsibilities, automated pipelines, etc., before we need them. Let's implement policy and tools at the same time rather than sequentially, so that the net result is not a halt in progress, only a reduction in risk and negative externalities.

Call to Action

While the default path of AI regulation might be familiar and inevitable, we should strive not just to react but anticipate and prepare proactively. Government agencies should lead efforts to shortcut the regulatory winter by fostering dialogue between technology innovators and regulators.

The AI.gov initiative, while commendable, appears to cater primarily to researchers affiliated with the National Science Foundation. Although it does offer valuable information, such as a comprehensive list of ongoing AI research programs, it needs broader inclusivity and involvement from other stakeholders.

Individual departments within the government have taken notable steps toward embracing AI. The Department of Defense's Chief Digital and Artificial Intelligence Office (CDAO), the National Institutes of Health's Bridge2AI program, and the Department of Health and Human Services' Office of the Chief Artificial Intelligence Officer (OCAIO) are all positive developments within executive branch agencies.

However, to regulate AI effectively and ensure responsible deployment across sectors, these departments, along with other relevant entities, must proactively war game their regulations. By anticipating the challenges and risks of AI implementation before they arise, policymakers can establish robust frameworks that promote innovation while safeguarding against harm.

This call to action emphasizes the importance of taking proactive measures rather than merely reacting after issues have already arisen. It urges governmental bodies to conduct honest and realistic policy planning exercises where they simulate different scenarios related to AI regulation. Through this process, decision-makers can identify gaps or weaknesses in existing policies and develop solutions accordingly.

By approaching AI regulation from a proactive standpoint informed by worst-case scenario planning, government agencies can foster an environment conducive to responsible innovation while ensuring public safety and ethical considerations are upheld throughout every adoption journey.
