Module 5.d: AI For Capture Prospecting - Part 1

Intro

Generative AI is rapidly becoming an invaluable tool in both the public and private sectors. It's akin to a highly skilled analyst who can quickly make sense of vast and disparate data sources. Let's unpack that.

In government settings, collecting and analyzing data can often resemble assembling a complex jigsaw puzzle with pieces scattered in different departments. Whether it's for infrastructure development, policy planning, or public health initiatives, the data is usually multi-faceted. Without a consolidated view, the decision-making process becomes protracted and less effective.

Switching gears to the industry perspective, the challenges are somewhat parallel. Companies, especially those in emerging markets, need to identify trends and opportunities, which can be like finding a needle in a haystack. This involves trawling through Long Range Acquisition Estimates, Integrated Priority Lists, Strategic Plans, and Broad Agency Announcements.

Interestingly, the challenges faced by government and industry aren't isolated; they’re interrelated. Government priorities affect business environments and vice versa. A lapse in data-driven decision-making in one sector invariably impacts the other. It's a symbiotic relationship that requires a streamlined approach to data handling for both to function optimally.

Generative AI is transforming this landscape. It can take these fragmented pieces of information, analyze them, and generate actionable insights. For the government, this means that policy decisions can be made more efficiently, backed by comprehensive data. For businesses, it allows for quicker identification of market opportunities or operational inefficiencies. Generative AI serves as a force multiplier. It doesn’t just replace manual effort; it enhances the quality of output. It’s like having a colleague who can quickly make connections that would take others hours to figure out.

I. Data Finding and Extraction

Let’s use a recent and relevant use case: Artificial Intelligence. Recently the National AI Initiative published an inventory of AI Use Cases by Executive Branch Agency. Whether you’re in government or industry, analyzing this list is very important. Why?

Government Acquisition Pros: you need to know what your customers are looking to buy.

Industry Developers: you need to know what the government is looking to buy, and what you need to sell.

We could talk about data scraping here, but frankly, we’re going to do the first part the hard way. We’ll just go get the use cases ourselves, since ai.gov was kind enough to compile them for us.

Prompt:

- Imagine you are a government data analyst

- you have a critical task from your supervisor

- each federal agency did a check of their current uses of artificial intelligence

they published these use cases in various formats, some listed, some in PDF, some in excel

what I want you to do is first extract and compile all of the use cases into easily understandable json format

then I want you to analyze the use cases to identify those that are semantically similar and those that are absolutely unique

We are going to do this step by step

Step 1 I am going to provide you with several documents

Step 2 you are going to extract all of the contents and format them into a compiled json format

Step 3 you are going to analyze the unified json for semantic similarities in the described use cases

Step 4 you are going to categorize the use cases as similar, unique, or other

don't move from step to step without my approval, ok?

- here's the use cases from nasa

2023_nasa_use_cases_for_ostp_may_2022_anonymous_tagged.pdf

- here's the use cases from department of interior

2023-agency-inventory-of-ai-use-cases-for-doigovdata.xlsx

- here's the use cases from department of homeland security

23_0726_ocio_dhs-inventory-of-ai-use-cases.xlsx

- here's the use cases from department of health and human services

hhs-ai-use-cases-2023-public-inventory.csv

- here are the use cases from department of veterans affairs:

VA Use Cases in Plain Text

In accordance with the directives of this EO, the VA has published the following AI use cases:

AI use case name

Summary

1

Artificial Intelligence physical therapy app

This app is a physical therapy support tool. It is a data source agnostic tool which takes input from a variety of wearable sensors and then analyzes the data to give feedback to the physical therapist in an explainable format.

2

Artificial intelligence coach in cardiac surgery

The artificial intelligence coach in cardiac surgery infers misalignment in team members’ mental models during complex healthcare task execution. Of interest are safety-critical domains (e.g., aviation, healthcare), where lack of shared mental models can lead to preventable errors and harm. Identifying model misalignment provides a building block for enabling computer-assisted interventions to improve teamwork and augment human cognition in the operating room.

3

AI Cure

AICURE is a phone app that monitors adherence to orally prescribed medications during clinical or pharmaceutical sponsor drug studies.

4

Acute kidney injury (AKI)

This project, a collaboration with Google DeepMind, focuses on detecting acute kidney injury (AKI), ranging from minor loss of kidney function to complete kidney failure. The artificial intelligence can also detect AKI that may be the result of another illness.

5

Assessing lung function in health and disease

Health professionals can use this artificial intelligence to determine predictors of normal and abnormal lung function and sleep parameters.

6

Automated eye movement analysis and diagnostic prediction of neurological disease

Artificial intelligence recursively analyzes previously collected data to both improve the quality and accuracy of automated algorithms, as well as to screen for markers of neurological disease (e.g. traumatic brain injury, Parkinson's, stroke, etc).

7

Automatic speech transcription engines to aid scoring neuropsychological tests.

Automated speech transcription engines analyze the cognitive decline of older VA patients. Digitally recorded speech responses are transcribed using multiple artificial intelligence-based speech-to-text engines. The transcriptions are fused together to reduce or obviate the need for manual transcription of patient speech in order to score the neuropsychological tests.

8

CuraPatient

CuraPatient is a remote tool that allows patients to better manage their conditions without having to see a provider. Driven by artificial intelligence, it allows patients to create a profile to track their health, enroll in programs, manage insurance, and schedule appointments.

9

Digital command center

The Digital Command Center seeks to consolidate all data in a medical center and apply predictive prescriptive analytics to allow leaders to better optimize hospital performance.

10

Disentangling dementia patterns using artificial intelligence on brain imaging and electrophysiological data

This collaborative effort focuses on developing a deep learning framework to predict the various patterns of dementia seen on MRI and EEG and explore the use of these imaging modalities as biomarkers for various dementias and epilepsy disorders. The VA is performing retrospective chart review to achieve this.

11

Machine learning (ML) for enhanced diagnostic error detection and ML classification of protein electrophoresis text

Researchers are performing chart review to collect true/false positive annotations and construct a vector embedding of patient records, followed by similarity-based retrieval of unlabeled records "near" the labeled ones (semi-supervised approach). The aim is to use machine learning as a filter, after the rules-based retrieval, to improve specificity. Embedding inputs will be selected high-value structured data pertinent to stroke risk and possibly selected prior text notes.

12

Behavidence

Behavidence is a mental health tracking app. Veterans download the app onto their phone and it compares their phone usage to that of a digital phenotype that represents people with confirmed diagnosis of mental health conditions.

13

Machine learning tools to predict outcomes of hospitalized VA patients

This is an IRB-approved study which aims to examine machine learning approaches to predict health outcomes of VA patients. It will focus on the prediction of Alzheimer's disease, rehospitalization, and Clostridioides difficile infection.

14

Nediser reports QA

Nediser is a continuously trained artificial intelligence “radiology resident” that assists radiologists in confirming the X-ray properties in their radiology reports. Nediser can select normal templates, detect hardware, evaluate patella alignment and leg length and angle discrepancy, and measure Cobb angles.

15

Precision medicine PTSD and suicidality diagnostic and predictive tool

This model interprets various real time inputs in a diagnostic and predictive capacity in order to forewarn episodes of PTSD and suicidality, support early and accurate diagnosis of the same, and gain a better understanding of the short and long term effects of stress, especially in extreme situations, as it relates to the onset of PTSD.

16

Prediction of Veterans' Suicidal Ideation following Transition from Military Service

Machine learning is used to identify predictors of veterans' suicidal ideation. The relevant data come from a web-based survey of veterans’ experiences within three months of separation and every six months after for the first three years after leaving military service.

17

PredictMod

PredictMod uses artificial intelligence to determine if predictions can be made about diabetes based on the gut microbiome.

18

Predictor profiles of OUD and overdose

Machine learning prediction models evaluate the interactions of known and novel risk factors for opioid use disorder (OUD) and overdose in Post-9/11 Veterans. Several machine learning classification-tree modeling approaches are used to develop predictor profiles of OUD and overdose.

19

Provider directory data accuracy and system of record alignment

AI is used to add value as a transactor for intelligent identity resolution and linking. AI also has a domain cache function that can be used for both Clinical Decision Support and for intelligent state reconstruction over time and real-time discrepancy detection. As a synchronizer, AI can perform intelligent propagation and semi-automated discrepancy resolution. AI adapters can be used for inference via OWL and logic programming. Lastly, AI has long term storage (“black box flight recorder”) for virtually limitless machine learning and BI applications.

20

Seizure detection from EEG and video

Machine learning algorithms use EEG and video data from a VHA epilepsy monitoring unit in order to automatically identify seizures without human intervention.

21

SoKat Suicidal Ideation Detection Engine

The SoKat Suicide Ideation Engine (SSIE) uses natural language processing (NLP) to improve identification of Veteran suicide ideation (SI) from survey data collected by the Office of Mental Health (OMH) Veteran Crisis Line (VCL) support team (VSignals).

22

Using machine learning to predict perfusionists’ critical decision-making during cardiac surgery

A machine learning approach is used to build predictive models of perfusionists’ decision-making during critical situations that occur in the cardiopulmonary bypass phase of cardiac surgery. Results may inform future development of computerized clinical decision support tools to be embedded into the operating room, improving patient safety and surgical outcomes.

23

Gait signatures in patients with peripheral artery disease

Machine learning is used to improve treatment of functional problems in patients with peripheral artery disease (PAD). Previously collected biomechanics data is used to identify representative gait signatures of PAD to 1) determine the gait signatures of patients with PAD and 2) the ability of limb acceleration measurements to identify and model the meaningful biomechanics measures from PAD data.

24

Medication Safety (MedSafe) Clinical Decision Support (CDS)

Using VA electronic clinical data, the Medication Safety (MedSafe) Clinical Decision Support (CDS) system analyzes current clinical management for diabetes, hypertension, and chronic kidney disease, and makes patient-specific, evidence-based recommendations to primary care providers. The system uses knowledge bases that encode clinical practice guideline recommendations and an automated execution engine to examine multiple comorbidities, laboratory test results, medications, and history of adverse drug events in evaluating patient clinical status and generating patient-specific recommendations

25

Prediction of health outcomes, including suicide death, opioid overdose, and decompensated outcomes of chronic diseases.

Using electronic health records (EHR) (both structured and unstructured data) as inputs, this tool outputs deep phenotypes and predictions of health outcomes including suicide death, opioid overdose, and decompensated outcomes of chronic diseases.

26

VA-DoE Suicide Exemplar Project

The VA-DoE Suicide Exemplar project is currently utilizing artificial intelligence to improve VA's ability to identify Veterans at risk for suicide through three closely related projects that all involve collaborations with the Department of Energy.

27

Machine learning models to predict disease progression among veterans with hepatitis C virus

A machine learning model is used to predict disease progression among veterans with hepatitis C virus.

28

Prediction of biologic response to thiopurines

Using CPRS and CDW data, artificial intelligence is used to predict biologic response to thiopurines among Veterans with irritable bowel disease.

29

Predicting hospitalization and corticosteroid use as a surrogate for IBD flares

This work examines data from 20,368 Veterans Health Administration (VHA) patients with an irritable bowel disease (IBD) diagnosis between 2002 and 2009. Longitudinal labs and associated predictors were used in random forest models to predict hospitalizations and steroid usage as a surrogate for IBD Flares.

30

Predicting corticosteroid free endoscopic remission with Vedolizumab in ulcerative colitis

This work uses random forest modeling on a cohort of 594 patients with Vedolizumab to predict the outcome of corticosteroid-free biologic remission at week 52 on the testing cohort. Models were constructed using baseline data or data through week 6 of VDZ therapy.

31

Use of machine learning to predict surgery in Crohn’s disease

Machine learning analyzes patient demographics, medication use, and longitudinal laboratory values collected between 2001 and 2015 from adult patients in the Veterans Integrated Service Networks (VISN) 10 cohort. The data was used for analysis in prediction of Crohn’s disease and to model future surgical outcomes within 1 year.

32

Reinforcement learning evaluation of treatment policies for patients with hepatitis C virus

A machine learning model is used to predict disease progression among veterans with hepatitis C virus.

33

Predicting hepatocellular carcinoma in patients with hepatitis C

This prognostic study used data on patients with hepatitis C virus (HCV)-related cirrhosis in the national Veterans Health Administration who had at least 3 years of follow-up after the diagnosis of cirrhosis. The data was used to examine whether deep learning recurrent neural network (RNN) models that use raw longitudinal data extracted directly from electronic health records outperform conventional regression models in predicting the risk of developing hepatocellular carcinoma (HCC).

34

Computer-aided detection and classification of colorectal polyps

This study is investigating the use of artificial intelligence models for improving clinical management of colorectal polyps. The models receive video frames from colonoscopy video streams and analyze them in real time in order to (1) detect whether a polyp is in the frame and (2) predict the polyp's malignant potential.

35

GI Genius (Medtronic)

The Medtronic GI Genius aids in detection of colon polyps through artificial intelligence.

36

Extraction of family medical history from patient records

This pilot project uses TIU documentation on African American Veterans aged 45-50 to extract family medical history data and identify Veterans who are at risk of prostate cancer but have not undergone prostate cancer screening.

37

VA /IRB approved research study for finding colon polyps

This IRB approved research study uses a randomized trial for finding colon polyps with artificial intelligence.

38

Interpretation/triage of eye images

Artificial intelligence supports triage of eye patients cared for through telehealth, interprets eye images, and assesses health risks based on retina photos. The goal is to improve diagnosis of a variety of conditions, including glaucoma, macular degeneration, and diabetic retinopathy.

40

Screening for esophageal adenocarcinoma

National VHA administrative data is used to adapt tools that use electronic health records to predict the risk for esophageal adenocarcinoma.

41

Social determinants of health extractor

AI is used with clinical notes to identify social determinants of health (SDOH) information. The extracted SDOH variables can be used during associated health related analysis to determine, among other factors, whether SDOH can be a contributor to disease risks or healthcare inequality.

- here's the use cases from department of state:

DoS Use Cases in Plain Language

BUREAU AI USE CASE NAME PUBLIC SUMMARY

GPA Facebook Ad Test Optimization System GPA’s production media collection and analysis system that pulls data from half a dozen different open and commercial media clips services to give an up-to-date global picture of media coverage around the world.

GPA Global Audience Segmentation Framework A prototype system that collects and analyzes the daily media clips reports from about 70 different Embassy Public Affairs Sections.

GPA Machine-Learning Assisted Measurement and Evaluation of Public Outreach

GPA’s production system for collecting, analyzing, and summarizing the global digital content footprint of the Department.

GPA GPATools and GPAIX GPA’s production system for testing potential messages at scale across segmented foreign sub-audiences to determine effective outreach to target audiences.

F NLP for Foreign Assistance Appropriations Analysis Natural language processing application for F/RA to streamline the extraction of earmarks and directives from the annual appropriations bill. Before NLP this was an entirely manual process.

R Optical Character Recognition – text extraction Extract text from images using standard python libraries; inputs have been websites to collect data

R Topic Modeling Cluster text into themes based on frequency of used words in documents; has been applied to digital media articles as well as social media posts; performed using available Python libraries

R forecasting using statistical models, projecting expected outcome into the future; this has been applied to COVID cases as well as violent events in relation to tweets

R Deepfake Detector Deep learning model that takes in an image containing a person’s face and classifies the image as either being real (contains a real person’s face) or fake (synthetically generated face, a deepfake often created using Generative Adversarial Networks).

R SentiBERTIQ GEC A&R uses deep contextual AI of text to identify and extract subjective information within the source material. This sentiment model was trained by fine-tuning a multilingual, BERT model leveraging word embeddings across 2.2 million labeled tweets spanning English, Spanish, Arabic, and traditional and simplified Chinese. The tool will assign a sentiment to each text document and output a CSV containing the sentiment and confidence interval for user review.

R TOPIQ GEC A&R’s TOPIQ tool automatically classifies text into topics for analyst review and interpretation. The tool uses Latent Dirichlet Allocation (LDA), a natural language processing technique that uncovers a specified number of topics from a collection of documents, and then assigns the probability that each document belongs to a topic.

R Text Similarity GEC A&R’s Text Similarity capability identified different texts that are identical or nearly identical by calculating cosine similarity between each text. Texts are then grouped if they share high cosine similarity and then available for analysts to review further.

R Image Clustering Uses a pretrained deep learning model to generate image embeddings, then uses hierarchical clustering to identify similar images.

R Louvain Community Detection Takes in a social network and clusters nodes together into “communities” (i.e., similar nodes are grouped together)

A Federal Procurement Data System (FPDS) Auto-Populate Bot A/LM collaborated with A/OPE to develop a bot to automate the data entry in the Federal Procurement Data System (FPDS), reducing the burden on post’s procurement staff and driving improved compliance on DATA Act reporting. This bot is now used to update ~300 FPDS awards per week. A/LM also partnered with WHA to develop a bot to automate closeout reminders for federal assistance grants nearing the end of the period of performance and begin developing bots to automate receiving report validation and customer service inbox monitoring.

A Product Service Code Automation ML Model

A/LM developed a machine learning model to scan unstructured, user entered procurement data such as Requisition Title and Line Descriptions to automatically detect the commodity and services types being purchased for enhanced procurement categorization.

A Tailored Integration Logistics Management System (ILMS) User Analytics A/LM plans to use available ILMS transactional data and planned transactions to develop tailored user experiences and analytics to meet the specifics needs of the user at that moment. By mining real system actions and clicks we can extract more meaningful information about our users to simplify their interactions with the system and reduce time to complete their daily actions.

A Supply Chain Fraud and Risk Models A/LM plans to expand current risk analytics through development of AI/ML models for detecting anomalous activity within the Integrated Logistics Management System (ILMS) that could be potential fraud or malfeasance. The models will expand upon existing risk models and focus on key supply chain functions such as: Asset Management, Procure-to-Pay, and Fleet Management.

A Tailored Integration Logistics Management System (ILMS) Automated User Support Bot ILMS developed and deployed an automated support desk assistant using ServiceNow Virtual Agent to simplify support desk interactions for ILMS customers and to deflect easily resolved issues from higher cost support desk agents.

PM NLP to pull key information from unstructured text Use NLP to extract information such as country names and agreement dates from dozens of pages of unstructured pdf document

PM K-Means clustering into tiers Cluster countries into tiers based off data collected from open source and bureau data using k-means clustering

CSO Automated Burning Detection The Village Monitoring System program uses AI and machine learning to conduct daily scans of moderate resolution commercial satellite imagery to identify anomalies using the near-infrared band.

CSO Automated Damage Assessments The Conflict Observatory program uses AI and machine learning on moderate and high-resolution commercial satellite imagery to document a variety of war crimes and other abuses in Ukraine, including automated damage assessments of a variety of buildings, including critical infrastructure, hospitals, schools, crop storage facilities.

A Within Grade Increase Automation A Natural Language Processing (NLP) model is used in coordination with Intelligent Character Recognition (ICR) to identify and extract values from the JF-62 form for within grade increase payroll actions. Robotic Process Automation (RPA) is then used to validate the data against existing reports, then create a formatted file for approval and processing.

A Verified Imagery Pilot Project The Bureau of Conflict and Stabilization Operations ran a pilot project to test how the use of a technology service, Sealr, could verify the delivery of foreign assistance to conflict-affected areas where neither U.S. Department of State nor our implementing partner could go. Sealr uses blockchain encryption to secure photographs taken on smartphones from digital tampering. It also uses artificial intelligence to detect spoofs, like taking a picture of a picture of something. Sealr also has some image recognition capabilities. The pilot demonstrated technology like Sealr can be used as a way to strengthen remote monitoring of foreign assistance to dangerous or otherwise inaccessible areas.

A Conflict Forecasting CSO/AA is developing a suite of conflict and instability forecasting models that use open-source political, social, and economic datasets to predict conflict outcomes including interstate war, mass mobilization, and mass killings. The use of AI is confined to statistical models including machine learning techniques including tree-based methods, neural networks, and clustering approaches.

CGFS Automatic Detection of Authentic Material The Foreign Service Institute School of Language Studies is developing a tool for automated discovery of authentic native language texts classified for both topic and Interagency Language Roundtable (ILR) proficiency level to support foreign language curriculum and language testing kit development.

CSO ServiceNow AI-Powered Virtual Agent (Chatbot) IRM’s BMP Systems is planning to incorporate ServiceNow’s Virtual Agent into our existing applications to connect users with support and data requests. The Artificial Intelligence (AI) is provided by ServiceNow as part of their Platform as a Service (PaaS).

CSO Apptio Working Capital Fund (IRM/WCF) uses Apptio to bill bureaus for consolidated services run from the WCF. Cost models are built in Apptio so bureaus can budget for the service costs in future FYs. Apptio has the capability to extrapolate future values using several available formulas.

FSI eRecords M/L Metadata Enrichment The Department’s central eRecords archive leverages machine learning models to add additional metadata to assist with record discovery and review. This includes models for entity extraction, sentiment analysis, classification and identifying document types.

IRM AI Capabilities Embedded in SMART Models have been embedded in the backend of the SMART system on OpenNet to perform entity extraction of objects within cables, sentiment analysis of cables, keyword extraction of topics identified within cables, and historical data analysis to recommend addressees and passlines to users when composing cables.

IRM Crisis Campaign Cable Analytics Use optical character recognition and natural language processing on Department cables in order to evaluate gaps and trends in crisis training and bolster post preparedness for crisis events.

IRM Fast Text Word Builder Fast Text is an AI approach to identifying similar terms and phrases based off a root word. This supports A&R’s capability to build robust search queries for data collection.

IRM Behavioral Analytics for Online Surveys Test (Makor Analytics) GEC executes a Technology Testbed to rapidly test emerging technology applications against foreign disinformation and propaganda challenges. GEC works with interagency and foreign partners to understand operational threats they’re facing and identifies technological countermeasures to apply against the operational challenge via short-duration tests of promising technologies. Makor Analytics is an AI quantitative research company that helps clients understand their audience’s perceptions and feelings helping to mitigate some of the limitations in traditional survey research. Makor Analytics’ proprietary behavioral analytics technology was developed to uncover true convictions and subtle emotions adding additional insights into traditional online survey results. The desired outcome of the pilot is a report analyzing the survey responses using behavioral analytics to provide target audience sentiment insights and subsequent recommendations. By leveraging AI behavioral analytics, the pilot aims to provide additional information beyond self-reported data that reflects sentiment analysis in the country of interest.

M/SS Crisis Campaign Cable Analytics Use optical character recognition and natural language processing on Department cables in order to evaluate gaps and trends in crisis training and bolster post preparedness for crisis events.

R Fast Text Word Builder Fast Text is an AI approach to identifying similar terms and phrases based off a root word. This supports A&R’s capability to build robust search queries for data collection.

R Behavioral Analytics for Online Surveys Test (Makor Analytics) GEC executes a Technology Testbed to rapidly test emerging technology applications against foreign disinformation and propaganda challenges. GEC works with interagency and foreign partners to understand operational threats they’re facing and identifies technological countermeasures to apply against the operational challenge via short-duration tests of promising technologies. Makor Analytics is an AI quantitative research company that helps clients understand their audience’s perceptions and feelings helping to mitigate some of the limitations in traditional survey research. Makor Analytics’ proprietary behavioral analytics technology was developed to uncover true convictions and subtle emotions adding additional insights into traditional online survey results. The desired outcome of the pilot is a report analyzing the survey responses using behavioral analytics to provide target audience sentiment insights and subsequent recommendations. By leveraging AI behavioral analytics, the pilot aims to provide additional information beyond self-reported data that reflects sentiment analysis in the country of interest.

- please proceed to step 2

- yes, please take your time and be thorough, I have plenty of time for this task, so accuracy is more important than speed, take a deep breath and begin

- at this point you’re going to tell the chat yes a bunch of times

- yes please do, this time just keep the agency, sub organization, and use case statement or summary, discard the rest, and rather than structure them into json, structure them into a csv, that should be easier

- at this point you’re going to tell the chat yes a bunch of times

- good, now analyze the csv for semantically or directly similar use cases, analyze both the use case names and descriptions. add a column to the csv and categorize each use case as similar or unique, for the use cases that are similar add a reference to the row in which the similar use case is listed

Output File:

Walkthrough

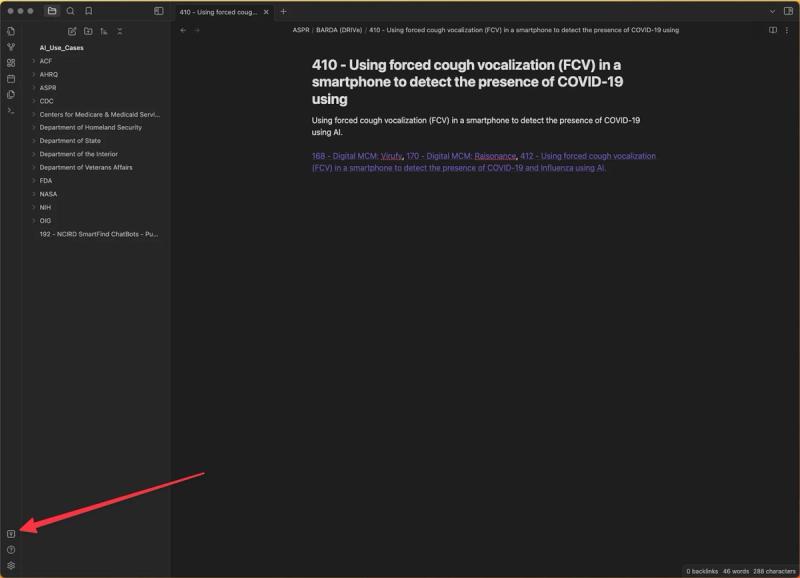
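For readers who want a feel for what the "similar or unique" pass is doing, here is a minimal sketch. It is not how the chat model works internally: it swaps in a crude word-overlap (bag-of-words cosine) score as a stand-in for semantic similarity. The field names, the stopword list, and the 0.2 threshold are all assumptions made for illustration.

```python
import math
import re
from collections import Counter

# Three rows in the trimmed shape the prompt asks for (agency, name, summary).
# The text comes from the VA and DoS listings above (the last summary is abridged).
ROWS = [
    {"agency": "VA", "name": "GI Genius (Medtronic)",
     "summary": "The Medtronic GI Genius aids in detection of colon polyps "
                "through artificial intelligence."},
    {"agency": "VA", "name": "VA /IRB approved research study for finding colon polyps",
     "summary": "This IRB approved research study uses a randomized trial for "
                "finding colon polyps with artificial intelligence."},
    {"agency": "DoS", "name": "Deepfake Detector",
     "summary": "Deep learning model that takes in an image containing a person's "
                "face and classifies the image as either being real or fake."},
]

# Small hand-picked stopword list (an assumption; tune it for real data).
STOPWORDS = {"a", "an", "and", "as", "being", "either", "for", "in", "is",
             "of", "or", "that", "the", "this", "through", "with"}


def vectorize(text):
    """Turn text into a word-count vector, ignoring case and stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def categorize(rows, threshold=0.2):
    """Label each row 'similar' or 'unique' and list matching 1-based row numbers."""
    vectors = [vectorize(r["name"] + " " + r["summary"]) for r in rows]
    labeled = []
    for i, row in enumerate(rows):
        similar_to = [j + 1 for j in range(len(rows))
                      if j != i and cosine(vectors[i], vectors[j]) >= threshold]
        labeled.append({**row,
                        "category": "similar" if similar_to else "unique",
                        "similar_to": similar_to})
    return labeled
```

Calling `categorize(ROWS)` marks the two colon polyp rows as similar to each other and the Deepfake Detector row as unique. Word overlap misses paraphrases that a language model would catch, so treat this only as a way to sanity-check the chat's output.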

II. Data Formatting and Display

Cool, so we turned a bunch of different files and formats into a single spreadsheet. This by itself is super useful, but let’s see if we can make it a more interactive format.

Input File:

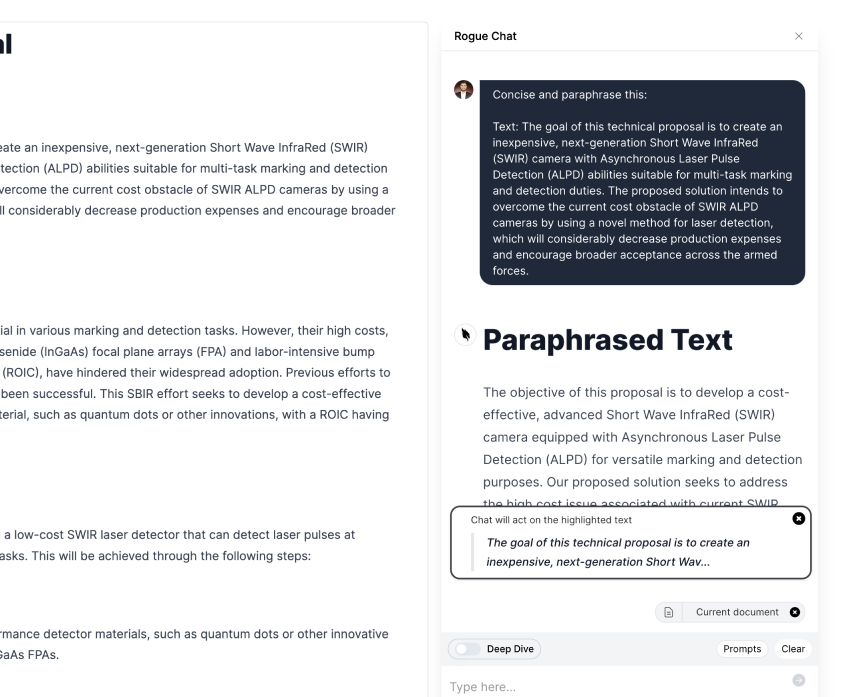

Prompt:

- I have a csv file that I want to convert into a compilation of .md files

To do this you will need to create a file structure that you will ultimately provide to me as a zip file

The zip file will be titled "AI Use Cases", this will be the top-level file

The csv file has a column titled "Agency", create one subordinate folder for each agency

The file has a column titled "Subagency", create one folder for each subagency and store them in their respective agency folder, records without a subagency should be consolidated under their respective Agency folder

The file contains a column titled 'Use Case Name', each use case name should be a separate .md file

each record has data in the column titled 'summary of use case' that should be stored as text in the respective .md file

There is also a field titled "similar to" that references related records, I would like these converted to links

to accomplish this task I want you to go step by step:

- take the row number for each use case and append that number to the beginning of each Use Case Title

- Create a new field called "Reference" and copy the new appended Use Case Title text that is referenced by the numbers in the "Similar to" field for each record that has a number in the "Similar To" field

- The text in the new "Reference" field is what should be converted to double bracket links like this example [[text]], and included at the end of each respective text section
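If you would rather run the conversion yourself than rely on the chat session, the steps above can be sketched in Python using only the standard library. This is one possible reading of the prompt, not the code the chat model actually wrote. The sample rows are hypothetical, and the sketch assumes the "similar to" column holds row numbers and that use case names contain no characters that are invalid in file names.

```python
import csv
import io
import re
import tempfile
import zipfile
from pathlib import Path

# Hypothetical sample data; the column names follow the prompt above.
SAMPLE_CSV = """Agency,Subagency,Use Case Name,summary of use case,similar to
HHS,NIH,Drug repurposing,AI to identify drug repurposing candidates,2
VA,,Repurposing existing drugs,Identify candidates for drug repurposing,1
DOT,FAA,Runway inspection,Computer vision to detect runway damage,
"""


def build_vault(csv_text, out_dir):
    """Write one .md file per use case and return the path of the zip archive."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Step 1: prepend the row number to each use case title.
    titles = {i: f"{i} {row['Use Case Name']}" for i, row in enumerate(rows, start=1)}
    root = Path(out_dir) / "AI Use Cases"
    for i, row in enumerate(rows, start=1):
        # Records without a subagency sit directly in the agency folder.
        folder = root / row["Agency"]
        if (row["Subagency"] or "").strip():
            folder = folder / row["Subagency"].strip()
        folder.mkdir(parents=True, exist_ok=True)
        # Step 2: resolve the numbers in "similar to" into numbered titles.
        numbers = re.findall(r"\d+", row["similar to"] or "")
        refs = [titles[int(n)] for n in numbers if int(n) in titles]
        # Step 3: append the references as [[double bracket]] links.
        body = row["summary of use case"].strip()
        if refs:
            body += "\n\n" + "\n".join(f"[[{t}]]" for t in refs)
        (folder / f"{titles[i]}.md").write_text(body + "\n", encoding="utf-8")
    zip_path = Path(out_dir) / "AI Use Cases.zip"
    with zipfile.ZipFile(zip_path, "w") as zf:
        for path in sorted(root.rglob("*.md")):
            zf.write(path, path.relative_to(Path(out_dir)).as_posix())
    return zip_path


with tempfile.TemporaryDirectory() as tmp:
    archive = build_vault(SAMPLE_CSV, tmp)
    with zipfile.ZipFile(archive) as zf:
        names = sorted(zf.namelist())
        first_note = zf.read("AI Use Cases/HHS/NIH/1 Drug repurposing.md").decode("utf-8")

print(names)
print(first_note)
```

The links work because each note's file name matches the text inside the double brackets, so keep the numbered title and the link text identical if you change the naming scheme.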

Output File:

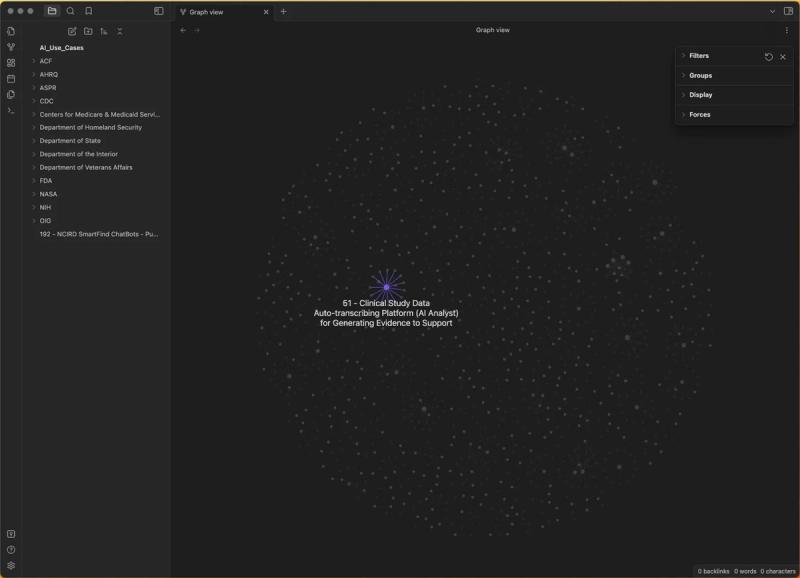

How to View in a Graph

- Install Obsidian (it’s free)

- Unzip the output file

- Open the unzipped folder as a new vault

Check it out, now you can visually see the connections between different use cases.

Government Acquisition Pros: you can see where co-investment with other agencies would be advantageous.

Industry Developers: you can see where you can sell the same products and services to multiple agencies.

Walkthrough:

https://www.youtube.com/watch?v=oLDdnmgyEFE

III. Turning Data into Ideas

Now you have some common use cases across multiple agencies. So what do you do about them? What kinds of products or services could you buy or sell to deliver on those use cases? Let's use AI to find some ideas for solutions…

Input File:

Prompt:

- I want to go back and analyze the csv file, I want you to find the records that have two or more listed similarities in the "similar to" column and just list them here, can you do that?

- now take this csv file and list out the use cases one at a time

- what I want to do is take a few use cases that have a high degree of semantic similarity and start ideating technical solutions, can you help me with that?

- let's start with AI to identify drug repurposing candidates, can you consolidate and summarize all of the use case summaries that relate to this?

- I want you to assume two conversational personas, one being an artificial intelligence expert, the other being an expert in drug development. I want you to have a constructive ideation conversation with these two personas, the role of the drug development expert is to identify the most common challenges and obstacles to drug repurposing, the role of the AI expert is to first listen to the challenges as the drug expert explains them and then offer potential AI solutions to drug repurposing, as the AI expert identifies solutions it is the drug expert's role to provide constructive feedback and criticism. The two personas should continue the process until the drug expert exhausts all possible known obstacles and the AI expert has identified a workable technical solution to all of the obstacles that the drug expert identifies

- this list of challenges and solutions is sufficient, now I want you to assume only the role of the AI expert and design a comprehensive system of systems that incorporates the technologies you described that will address all of the challenges that the drug expert raised. be very thorough and describe the system architecture, system interfaces, data flows, input and output data formats, and finally describe an example user story of how the system would be used by an ideal user

- explain how this solution would address the four use cases in the csv file
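The first prompt in this list, pulling out the records with two or more entries in the "similar to" column, is simple enough to double check outside the chat. Here is a minimal Python sketch, assuming the column stores row numbers separated by commas or spaces. The sample rows and the function name are hypothetical.

```python
import csv
import io
import re

# Hypothetical sample data; only the two columns needed for the filter.
SAMPLE_CSV = """Use Case Name,similar to
Drug repurposing candidates,"2, 4"
Repurposing existing drugs,1
Runway inspection,
Drug candidate screening,"1, 2, 5"
"""


def with_multiple_similarities(csv_text, minimum=2):
    """Return the names of use cases that list at least `minimum` similar rows."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [row["Use Case Name"] for row in rows
            if len(re.findall(r"\d+", row["similar to"] or "")) >= minimum]


hits = with_multiple_similarities(SAMPLE_CSV)
print(hits)
```

Comparing this list against what the chat returns is a quick way to catch rows the model skipped.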

Walkthrough:

https://www.youtube.com/watch?v=gqKTqK8D2Ms

IV. Practical Exercise

- Do it yourself! See if you can replicate our results, try some variations in your prompts, see what you get.

- Follow the steps above and share your results in the group

- Give feedback below
